Predicting Outcomes

The next step in decision structuring is to predict potential outcomes under each alternative, filling in the cells of the consequence table (Table 10.1). By design, social values have no direct role in this part of the decision-making process. The emphasis is on making robust predictions to provide the basis for selecting a preferred management option. This is what is meant by evidence-based decision making.

The task of predicting outcomes usually requires specialized knowledge. In some cases, the required expertise may be held by members of the planning team. In other cases, planners may solicit input from relevant experts on an ad hoc basis. For complex decisions, a dedicated technical team may be established to work alongside the planning team.

The foremost concern when making predictions is reliability. Good decisions require good information. In the past, a science-based approach was seen as necessary and sufficient. In recent years, there has been a trend toward also incorporating local knowledge held by individuals living or working in the planning area (Failing et al. 2007). This includes the traditional ecological knowledge of Indigenous people as well as knowledge held by resource companies, local residents, hunters, anglers, trappers, and so forth.

The rationale for incorporating local knowledge is that it can fill gaps in scientific knowledge, leading to better predictions (Failing et al. 2007). There is also a social dimension. Stakeholders are unlikely to support a decision if their views about potential management outcomes are dismissed out of hand.

The challenge for decision makers is that accepting all sources of information uncritically is inconsistent with the aim of generating predictions that are as reliable as possible. The solution is to be inclusive when assembling information and then select the best of what is available using pre-established criteria concerning reliability and utility. The selection criteria can be expressed as a series of questions:

- For observations, what steps have been taken to minimize observer bias, measurement error, and the role of chance?

- For conclusions, are the inferences supported by factual data? What steps have been taken to minimize bias and errors in logic?

- Is the information quantitative or qualitative? How much detail is provided?

- How appropriate is the spatial and temporal scope of the information relative to the proposed application?

- What type of vetting process has the information been subjected to?

- Has the information been organized and summarized or is it in raw form?

In controversial cases, competing sources of information can be treated as alternate hypotheses and explored in tandem. This can be considered a form of sensitivity testing, which we will discuss below.

Modelling Approaches

Humans are adept at making predictions about future events on the basis of past observations and accumulated knowledge, but there are limits to how much information we can mentally store and process. Moreover, our inferences suffer from several well-known mental biases, such as overweighting recent events (Kahneman 2011). A variety of decision support tools have been developed to overcome these limitations and they should be used when time and capacity permit (Addison et al. 2013). We will refer to these tools under the collective heading of modelling approaches.

Two types of models are commonly used to support resource management decisions. First, and most common, are statistical models that mathematically summarize important relationships in the data obtained from observational studies (see Chapter 6). An important benefit of these models is that the reliability of their predictions can be expressed in the form of confidence limits. Their main limitation is that they are inflexible. Statistical models cannot be used to model dynamic processes, nor can they be extended to include new variables.

The second type of model is a process, or “stock and flow,” model. These models track the evolution of selected system components under the influence of one or more driving variables (Box 10.2). The internal mechanisms of process models are usually based on findings from observational studies. In many cases, locally obtained information is lacking, so information from other locations must be used, which adds uncertainty to the model.

When a modelling approach is used, an ongoing dialogue between modellers and planners should be established. In the early stages, modellers can help planners understand the system they are working in, and its limitations. This can facilitate the refinement of objectives and management alternatives. In later stages, modellers provide predictions about the performance of management alternatives and convey the uncertainty associated with these predictions.

Information also needs to flow from planners to modellers (Addison et al. 2013). Decision support models are not the same as research models. The intent is to provide decision makers with the information they need to assess and compare the available management options. Modellers should be responsive to the needs and priorities of planners and incorporate local information when appropriate. Furthermore, the model cannot be overly complex or opaque. A “black box” model that must be taken on faith is unlikely to be perceived as legitimate by planners and stakeholders.

When modelling results are presented, a reference point should be included to illustrate their significance. For example, the opportunity costs of protection might be described as a percentage of regional resource revenue instead of just a dollar value. For conservation objectives, it is useful to present results relative to an ecological benchmark or a legal requirement. As a general rule, multiple framings should be provided, with the aim of enhancing understanding.
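The arithmetic behind such framings is simple, as the following minimal sketch shows. All figures and names here are hypothetical and chosen only for illustration; they are not drawn from any planning exercise.

```python
def as_percent_of(value: float, reference: float) -> float:
    """Express a value as a percentage of a reference point."""
    if reference == 0:
        raise ValueError("reference point must be non-zero")
    return 100.0 * value / reference


# Hypothetical modelling results and reference points (illustrative only).
opportunity_cost = 12_000_000      # dollars per year of forgone resource revenue
regional_revenue = 400_000_000     # dollars per year of total regional resource revenue
projected_caribou = 350            # animals projected under one alternative
benchmark_caribou = 500            # animals in an assumed ecological benchmark

cost_share = as_percent_of(opportunity_cost, regional_revenue)
benchmark_share = as_percent_of(projected_caribou, benchmark_caribou)

# Present both framings side by side: the raw value and the value relative to its reference.
print(f"Opportunity cost: ${opportunity_cost:,} per year ({cost_share:.1f}% of regional revenue)")
print(f"Projected caribou: {projected_caribou} animals ({benchmark_share:.0f}% of benchmark)")
```

Reporting the raw value alongside the relative value is one way of providing multiple framings without hiding the underlying numbers.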

In some cases, it may be possible to allow the planning team or stakeholders to interact directly with the model in facilitated sessions (see Case Study 5). This can be an efficient way for planners and stakeholders to gain insight into the trade-offs that exist and their options for dealing with them. Facilitation is key for this approach to be effective.

Box 10.2. Constructing a Stock and Flow Model

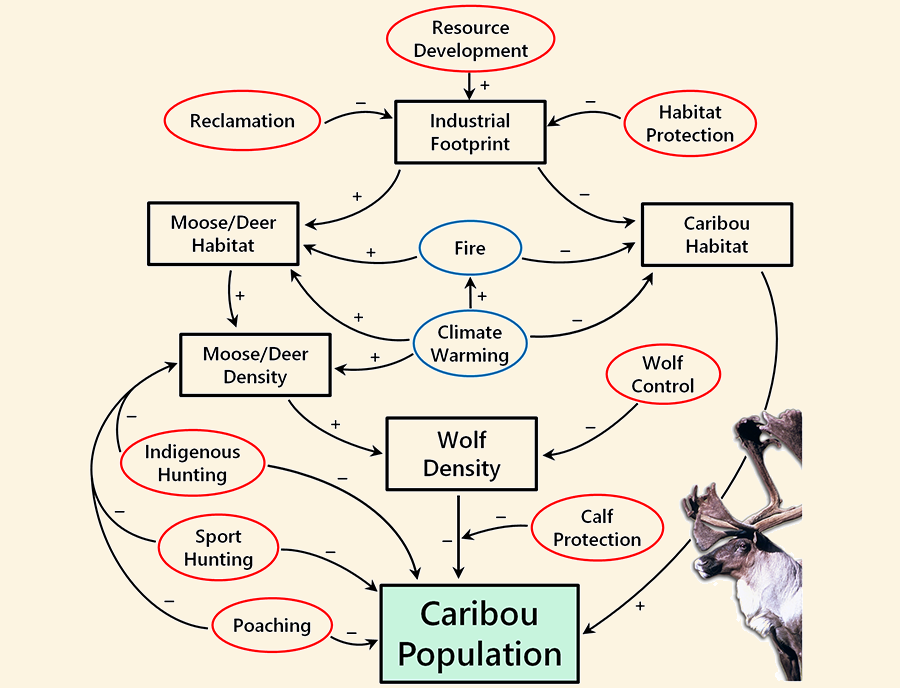

The first step in building a stock and flow model is to create a conceptual diagram that describes the components of the system and their connections. Sometimes the entire system can be captured in a single model. In other cases, individual objectives are modelled separately. Fig. 10.4 presents a conceptual model for a woodland caribou system.

Stock and flow models are named for the two types of components they contain. Stock variables are things that can be counted, like caribou. Flow variables are things that can be measured as rates, like predation. Flow variables are what cause stocks to change over time.

For SDM applications, the outcomes of interest are represented as stock variables, and proposed management actions are represented as flow variables. If any of the management actions act indirectly, then the intervening components also need to be included. For example, in Fig. 10.4, wolf control measures are directed toward wolves, not caribou, so wolf density needs to be included as a stock variable. Wolf density is linked to caribou population size through a flow variable describing the rate of wolf predation.

Additional flow variables may be required to account for the effects of external processes, such as climate change (blue ovals in Fig. 10.4). Processes are considered to be external if they are not amenable to management control within the context of the decision frame. As with other forms of modelling, a balance must be sought between adding detail and maintaining overall tractability. In SDM applications, we are most concerned with processes that alter the relative performance of management alternatives, affecting our determination of which is best.

To use the conceptual model for making predictions, it must be parameterized, which means that values are assigned to all of the variables. Stock variables are initially set to the current state of the system—the starting point of our projections. Flow variables that describe management actions are defined by the management alternative being examined in a given model run. Other flow variables are treated as cause and effect relationships between stock variables and are generally defined using equations derived from empirical research. For flow variables that describe external inputs, values are typically based on past behaviour or the extrapolation of trends.

The last step is to convert the conceptual model into a computer simulation model that can be used to track outcomes. Computers provide the computational power needed to explore complex systems quickly and reliably. Once parameterized with the current state of the system, the simulation model can be used to explore how the system will evolve under alternative management approaches.
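The steps described in this box can be sketched in a few lines of code. The example below assumes a simplified two-stock system (caribou and wolves) projected in annual time steps. The parameter values, the functional forms, and the names `Params` and `simulate` are hypothetical placeholders for illustration; they are not taken from Fig. 10.4 or from any empirical study.

```python
from dataclasses import dataclass


@dataclass
class Params:
    """Hypothetical flow parameters (illustrative values only)."""
    caribou_growth: float = 0.10   # net recruitment rate per year, before wolf predation
    predation_rate: float = 0.004  # fraction of caribou lost per wolf per year
    wolf_growth: float = 0.15      # intrinsic wolf growth rate per year
    wolf_capacity: float = 40.0    # number of wolves the area can support
    wolf_removal: float = 0.0      # management flow: fraction of wolves removed per year


def simulate(caribou: float, wolves: float, p: Params, years: int) -> list[tuple[float, float]]:
    """Project both stocks forward in annual steps and return the full trajectory."""
    trajectory = [(caribou, wolves)]
    for _ in range(years):
        # Flows are calculated from the stocks at the start of each year.
        births = p.caribou_growth * caribou
        predation = min(caribou + births, p.predation_rate * wolves * caribou)
        wolf_change = p.wolf_growth * wolves * (1 - wolves / p.wolf_capacity)
        removed = p.wolf_removal * wolves

        # Stocks are updated by their inflows and outflows.
        caribou = max(0.0, caribou + births - predation)
        wolves = max(0.0, wolves + wolf_change - removed)
        trajectory.append((caribou, wolves))
    return trajectory


# Stocks start at the current state of the system (made-up numbers).
# Each management alternative is one model run with its own setting of the management flow.
no_control = simulate(500.0, 30.0, Params(wolf_removal=0.0), years=20)
wolf_control = simulate(500.0, 30.0, Params(wolf_removal=0.30), years=20)

print(f"Caribou after 20 years, no wolf control:   {no_control[-1][0]:.0f}")
print(f"Caribou after 20 years, with wolf control: {wolf_control[-1][0]:.0f}")
```

Note that wolf control never acts on caribou directly in this sketch. It changes the wolf stock, and the predation flow carries that change through to caribou, mirroring the indirect pathway described above.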

Handling Uncertainty

Uncertainty presents a major challenge for decision makers. It is difficult to choose a preferred management alternative when the predictions of performance are unreliable. Therefore, every effort should be made to minimize uncertainty when obtaining data and constructing models. Residual uncertainty should be quantified so that it can be taken into account when decisions are made.

The methods for minimizing uncertainty when conducting observational studies are well developed. These are core elements of the scientific method (see Chapter 4). They include established techniques for designing studies and making observations, as well as statistical methods for generating robust predictions and quantifying the level of uncertainty.

Process models present a greater challenge (McCarthy 2014). Starting parameters are subject to measurement error and the cause-effect relationships among components are often only approximations. Biological systems are complex, and many modelled processes may be only partially understood. Extrapolation error is another problem, arising when information from external study areas is used. Finally, uncertainty arises from what is not in the model. The number of components that can be included is constrained by data availability, modelling capacity, and the need to maintain tractability.

The main tool for handling uncertainty in process models is sensitivity testing (Jackson et al. 2000). This entails running the model across a range of possible parameter estimates for each variable, rather than just using the best estimates. The range of values should include the outer bounds of what can reasonably be expected for each parameter, based on existing knowledge.

The sensitivity testing process usually begins by exploring the sensitivity of one variable at a time, in sequential model runs. Thereafter, logical combinations of variables may be explored together, up to the limit of what is practical. The number of combinations (and model runs) needed for combined sensitivity testing quickly becomes unmanageable when more than a few variables are involved.
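A minimal sketch of one-at-a-time sensitivity testing follows. It assumes a toy projection in which a constant net annual rate is compounded over the planning horizon. The function `projected_caribou`, the parameter names, and all of the ranges are hypothetical and serve only to illustrate the procedure.

```python
def projected_caribou(growth: float, predation_rate: float, wolves: float,
                      start: float = 500.0, years: int = 20) -> float:
    """Toy projection: a constant net annual rate compounded over the horizon."""
    net_rate = growth - predation_rate * wolves
    return start * (1 + net_rate) ** years


# Lower bound, best estimate, and upper bound for each parameter (hypothetical values).
ranges = {
    "growth": (0.06, 0.10, 0.14),
    "predation_rate": (0.002, 0.004, 0.006),
    "wolves": (20.0, 30.0, 40.0),
}
best = {name: mid for name, (_, mid, _) in ranges.items()}

# One-at-a-time testing: move a single parameter to each of its bounds
# while holding all other parameters at their best estimates.
swings = {}
for name, (low, _, high) in ranges.items():
    outcomes = [projected_caribou(**{**best, name: value}) for value in (low, high)]
    swings[name] = max(outcomes) - min(outcomes)

for name, swing in sorted(swings.items(), key=lambda item: item[1], reverse=True):
    print(f"{name:15s} swing in projected caribou: {swing:8.0f}")

# Combined testing: a full factorial design needs (levels ** parameters) model runs.
levels = 3
for n_params in (3, 6, 10):
    print(f"{n_params} parameters at {levels} levels each -> {levels ** n_params} runs")
```

Parameters with the largest swings are the ones for which better estimates would most improve the predictions. The last loop shows why combined testing is usually restricted to a few logical groupings of variables: the number of runs grows exponentially with the number of parameters.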

It is usually more efficient to begin with a simple model, and then add detail where it is needed to reduce uncertainty, than to begin with a highly complex model. Moreover, only variables that contribute to the discrimination of management alternatives warrant inclusion.

For processes that are very poorly understood, the role of uncertainty can be investigated by asking “what if” questions—another form of scenario analysis (Peterson et al. 2003). Climate change presents an obvious example. There are still so many unknowns and contingencies related to climate change that we cannot treat the rate of warming as just another variable. Instead, we are faced with a number of plausible scenarios, with little guidance as to which is most likely. These scenarios can be investigated to determine how robust the model’s predictions are likely to be, given alternative climate futures.
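One way to carry out this kind of scenario analysis is to run every management alternative under every scenario and check whether the conclusions hold across all of them. The sketch below assumes a toy model in which an external driver erodes caribou recruitment each year, and a target of maintaining the starting population. The alternatives, scenario values, and the function `caribou_after` are hypothetical and purely illustrative.

```python
def caribou_after(wolf_removal: float, habitat_loss_rate: float,
                  start: float = 500.0, years: int = 20) -> float:
    """Toy projection in which an external scenario erodes the growth rate each year."""
    caribou = start
    growth = 0.08
    for _ in range(years):
        predation = 0.12 * (1 - wolf_removal)  # predation falls as more wolves are removed
        caribou *= 1 + growth - predation
        growth -= habitat_loss_rate            # external driver, outside management control
    return caribou


# Management alternatives: fraction of wolves removed per year (hypothetical).
alternatives = {"status quo": 0.0, "moderate wolf control": 0.3, "intensive wolf control": 0.6}
# Climate scenarios: annual erosion of the caribou growth rate (hypothetical).
scenarios = {"mild": 0.000, "intermediate": 0.002, "severe": 0.005}
target = 500.0  # assumed objective: maintain at least the starting population

meets_target: dict[str, dict[str, bool]] = {}
for alt, removal in alternatives.items():
    meets_target[alt] = {
        name: caribou_after(removal, loss_rate) >= target
        for name, loss_rate in scenarios.items()
    }

for alt, results in meets_target.items():
    robust = all(results.values())
    print(f"{alt:24s} {results}  robust across scenarios: {robust}")
```

In this toy setup, intensive wolf control meets the target under the mild scenario but not under the severe one, so a conclusion based on a single assumed climate future would not be robust. No probabilities are attached to the scenarios, which is consistent with treating them as plausible futures rather than as values of a well-characterized variable.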

Further reduction of uncertainty can be achieved through scientific study, which mostly occurs between planning cycles. This is the tie-in to our discussion of policy-relevant research in Chapter 4. The contribution of SDM is that it identifies and focuses attention on the uncertainties that matter most to management. Research and learning can also be integrated into the decision process itself—a topic we will turn to at the end of the chapter.

A core principle of SDM is that it is better to make timely decisions on the basis of the best information available than to wait for better information to arrive (Martin et al. 2009). This is particularly true for biodiversity objectives, where delay in decision making generally equates with continued decline (Gregory et al. 2013).

A related principle is that all decisions should be seen as provisional. As new information comes to light, and as conditions change, the balance among management options may very well change. This is why the SDM process is depicted as a cycle.

Expert Opinion

Many planning initiatives do not have the time or capacity needed for modelling. They rely instead on expert opinion for predicting the outcomes of management actions. Such predictions are usually qualitative rather than quantitative, and there are few options for characterizing the effects of uncertainty. Moreover, there is less transparency concerning the factual basis of the predictions. Nevertheless, this approach is often used because no other options exist.

When predictions are based on expert opinion, it is best to solicit input from a diverse group of experts. This is because specialized knowledge is typically narrowly based. To obtain information that is both detailed and complete, multiple perspectives are needed.

Consideration should also be given to how expert opinions are elicited. A structured approach is best, involving a combination of individual meetings and group workshops (MacMillan and Marshall 2006). The necessary context should be provided, and the questions should be well framed. It should also be made clear that professional opinions are being sought, not value judgments. The role of experts is to help decision makers understand the consequences of management alternatives, not to tell them what to do.

Workshops are particularly useful in that they allow experts to share perspectives and challenge each other’s ideas. The objective is to document areas of agreement and disagreement, rather than to force a consensus. This is how uncertainty can be characterized when modelling is not an option. In addition, experts should be challenged to provide the reasoning underlying their predictions and to express their level of confidence in them.
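Where experts are able to express their predictions numerically, the results of an elicitation can be recorded in a way that preserves disagreement instead of averaging it away. The following sketch shows one possible approach, assuming each expert provides a lowest plausible, best, and highest plausible estimate along with a self-rated confidence level. The data, field names, and the non-overlap rule for flagging disagreement are hypothetical; they are not part of any established elicitation protocol.

```python
from statistics import median

# Hypothetical elicitation results: each expert's estimates of caribou numbers
# in 20 years under one management alternative, plus self-rated confidence.
estimates = [
    {"expert": "A", "low": 300, "best": 420, "high": 520, "confidence": "medium"},
    {"expert": "B", "low": 380, "best": 450, "high": 600, "confidence": "high"},
    {"expert": "C", "low": 150, "best": 220, "high": 280, "confidence": "low"},
]

# Report the full spread of opinion along with a central value.
overall_low = min(e["low"] for e in estimates)
overall_high = max(e["high"] for e in estimates)
central = median(e["best"] for e in estimates)

# Flag a pair of experts as disagreeing when their plausible ranges do not overlap at all.
disagreements = [
    (a["expert"], b["expert"])
    for i, a in enumerate(estimates)
    for b in estimates[i + 1:]
    if a["high"] < b["low"] or b["high"] < a["low"]
]

print(f"Range of plausible outcomes: {overall_low} to {overall_high} (median best estimate {central})")
print(f"Pairs of experts with non-overlapping ranges: {disagreements}")
```

The flagged pairs identify where the reasoning behind the estimates most needs to be discussed and documented.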

Box 10.3. The Precautionary Principle

The precautionary principle states that measures to prevent environmental degradation should not be delayed because of a lack of full scientific certainty (UN 1992). The Canadian Species at Risk Act applies the precautionary principle in the context of preventing the loss of wildlife species (GOC 2002). When making resource management trade-off decisions, this principle directs us to err on the side of caution when there is uncertainty about environmental outcomes. The aim is to minimize the risk to biodiversity that such uncertainty poses.

In SDM, the precautionary principle serves as a value position rather than a decision-making rule (Gregory and Long 2009). Prompt management action and the minimization of environmental risk are objectives we would like to achieve to support environmental values. But in most real-world settings, environmental objectives do not automatically override other social objectives. A balance must be sought, which is why SDM is needed.

Traditional Ecological Knowledge

Traditional ecological knowledge (TEK) is another form of expertise that can be used to support decision making. TEK derives from the close association that Indigenous peoples have with the land. It is place-based and multifaceted. Usher (2000) describes four distinct components of TEK:

1. Factual knowledge about the environment, including personal observations, personal generalizations based on life experience, and traditions and teachings passed down from generation to generation. This category ranges from specific observations to explanatory inferences about why things are the way they are.

2. Factual knowledge about past and current use of the environment, particularly as it pertains to the rights and interests of local Indigenous communities.

3. Culturally based value statements about how things should be and ethical statements concerning appropriate behaviour toward animals and the environment.

4. A culturally based worldview that underlies the first three categories, providing the lens through which observation and experience are processed.

TEK informs the value-based positions that Indigenous groups advance as participants in decision-making processes. It can also be used as a form of expert opinion for predicting the outcomes of certain types of management actions. In the latter case, it is necessary to disentangle the value aspects of TEK from the factual aspects. Under SDM, values inform objectives and choices, but predictions of outcomes are to be made as objectively as possible.

The main strength of TEK as a source of information is that it is obtained through an intimate and ongoing relationship between Indigenous people and the land. This permits detailed observations to be made over large areas and over long periods of time, especially about animal behaviour, population sizes, habitat preferences, and animal responses to human activities (Gadgil et al. 1993). In contrast, scientific researchers face capacity constraints that force them to choose between studying small areas intensively or large areas coarsely. Furthermore, few studies last more than a few years.

The main shortcoming of TEK is that the observations of natural phenomena, while numerous, are generally not structured or recorded (Usher 2000). Furthermore, the information is distributed among a large number of observers. Findings may be shared through informal dialogue, but are not formally synthesized. This presents a considerable logistical challenge for applying TEK in the context of SDM. Moreover, the reliance on memory and oral communication limits the level of detail that can be obtained and makes it impossible to judge the level of reliability (Usher 2000).

There is a direct parallel here with the challenges of citizen science, discussed in Chapter 4. Naturalists have been recording natural phenomena for centuries. But it was not until people began making observations using smartphone apps (including a photo, time stamp, and GPS location) and uploading their sightings to centralized databases that the full potential of citizen science was realized.

In practice, Indigenous people and researchers generally do not observe the same things over the same time period. Therefore, TEK and scientific knowledge are often complementary. For example, a scientific telemetry-based study of Arctic foxes around Pond Inlet, Nunavut, found that individual foxes use terrestrial and marine habitats throughout the winter. This knowledge was largely inaccessible to local Indigenous people because individual foxes seen at different locations are not easily differentiated (Gagnon and Berteaux 2009). For their part, Inuit elders and hunters had TEK concerning fox diets and behaviour that scientists did not have the time or capacity to collect. A predictive model that combined both sources of information would be superior to one that relied solely on scientific information or TEK alone.

There are, of course, cases when TEK and scientific information are in direct conflict (Stirling and Parkinson 2006). This arises most often in the context of co-management, especially as it pertains to hunted populations (Nadasdy 2003; Armitage et al. 2011). In such cases, the criteria we discussed earlier concerning information reliability should be applied. However, there is also a social dimension that cannot be ignored.

When decision making is shared between government and local Indigenous communities, the decision-making process is itself a point of negotiation (Houde 2007). It should not be assumed that SDM and scientific principles will automatically be supported by Indigenous communities. Support has to be developed over time through collective learning and the establishment of trust. This remains a work in progress. In the words of Natcher et al. (2005, p. 241), “co-management has more to do with managing relationships than managing resources.”